Digital Humanities Scholarship Generator

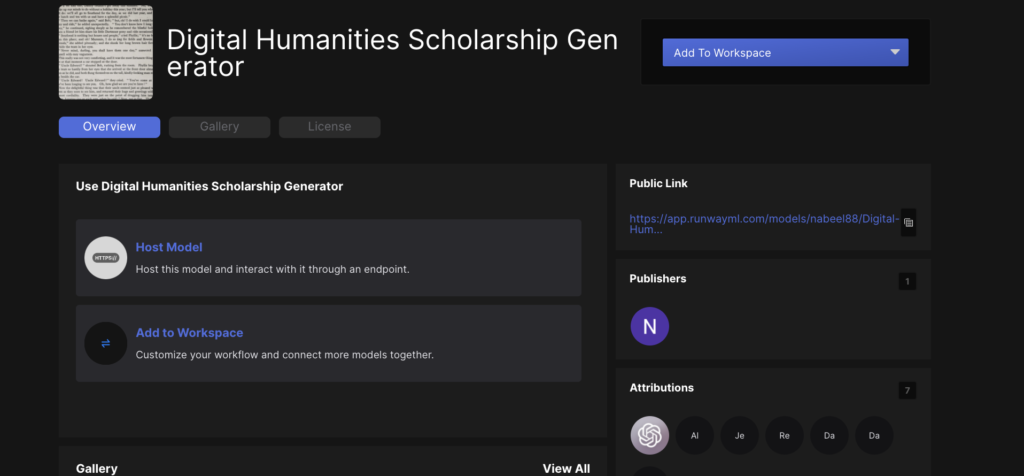

Click Here to Access the Model Using RunwayML

Update: I have also trained a GPT-J model and an updated GPT-2 model with more scholarly examples. However, neural networks can produce different results each time they are run, and I find that the original still works best. If you would like access to any of the other models, please email me.

The Digital Humanities Scholarship Generator uses GPT-2, a deep neural network, and was trained on a set of JSTOR articles about the digital humanities. The model is publicly available on RunwayML, which seeks to make machine learning more accessible. To try it, open the RunwayML app and browse the publicly available models. You can also train it with additional documents.

Developments in natural language processing have allowed digital humanities scholars to ask new questions about literature. These "distant reading" techniques are now increasingly applied to visual culture thanks to advances in computer vision. Deep neural networks, machine learning, and AI are likely to drive change in the digital humanities moving forward. Yet digital humanities scholars have not yet used generative models as a means of asking new questions. For instance, what does it mean to "speak like a 19th-century American" if we no longer confine ourselves to studying literary texts as distinct entities? Using deep learning models, we can now "feed" a model all 19th-century American newspapers and create artificial text that matches the conglomeration of literary output throughout the century. I am currently working on a project to do just this. We have yet to explore how these generative models challenge our notions of scholarship, agency, textual analysis, and digital media.

Scholarship generators, such as the Postmodernism Generator and SCIgen, have been particularly controversial. While AI already writes numerous "breaking news articles," researchers still view their intellectual labor as distinct and safe from computational advancements. Previous generators have failed to produce believable papers, but they also rely on older technologies.

This Digital Humanities Generator seeks to understand how a single community, such as the digital humanities, should respond to scholarly generators. Rather than seeing them as problematic, I embrace scholarly generation as a form of creativity that provides not only epistemological challenges but also affordances.

Because of some data-cleaning issues, the model tends to randomly generate "headers" that appeared at the top of OCR'd pages, as well as references. These can usually be ignored. I am currently training both GPT-J and GPT-2 on a larger scholarly dataset. Both models have their advantages, although GPT-J usually produces better results. As mentioned earlier, I am also working on creating new models with different datasets (such as 19th-century newspapers) to understand literary trends.
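For anyone preparing their own training documents, the stray page headers described above can often be reduced before training. The following is only a minimal sketch of one possible cleanup pass, not the procedure used for this model. It assumes the OCR output is available as one string per page, and the function names (`normalize`, `strip_running_headers`) and the parameters `top_n` and `min_share` are hypothetical choices for illustration. It does not attempt to remove reference lists.

```python
import re
from collections import Counter


def normalize(line: str) -> str:
    """Lowercase, drop digits (e.g. page numbers), and collapse whitespace."""
    return re.sub(r"\s+", " ", re.sub(r"\d+", "", line)).strip().lower()


def strip_running_headers(pages, top_n=2, min_share=0.5):
    """Remove lines near the top of each page that recur across many pages.

    pages: list of strings, one per OCR'd page (assumed input format).
    top_n: how many leading non-empty lines per page to inspect.
    min_share: fraction of pages a line must appear on to count as a header.
    """
    # Count, per page, which normalized lines appear among the leading lines.
    counts = Counter()
    for page in pages:
        leading = [ln for ln in page.splitlines() if ln.strip()][:top_n]
        counts.update({normalize(ln) for ln in leading})

    # A line is treated as a running header only if it recurs often enough.
    threshold = max(2, min_share * len(pages))
    headers = {key for key, n in counts.items() if key and n >= threshold}

    cleaned = []
    for page in pages:
        kept, seen = [], 0
        for line in page.splitlines():
            if line.strip() and seen < top_n:
                seen += 1
                if normalize(line) in headers:
                    continue  # skip the recurring header line
            kept.append(line)
        cleaned.append("\n".join(kept).strip())
    return cleaned
```

Given three pages that each begin with a line such as "Journal of Example Studies 12" (a made-up header with a changing page number), the function would drop that first line from every page and keep the body text. Lowering `min_share` makes the filter more aggressive, at the risk of deleting legitimate opening lines that happen to repeat.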